
Daejin Kim

I am an M.S. student at DAVIAN Lab (Advisor: Jaegul Choo), part of KAIST AI at the Korea Advanced Institute of Science and Technology (KAIST). I currently work on computer vision and time series forecasting. Most recently, I have been interested in ways to guarantee the faithfulness of visual explanations for deep-learning-based models.

Email: kiddj@kaist.ac.kr

Publications (C: peer-reviewed conference, J: peer-reviewed journal, * = equal contribution)


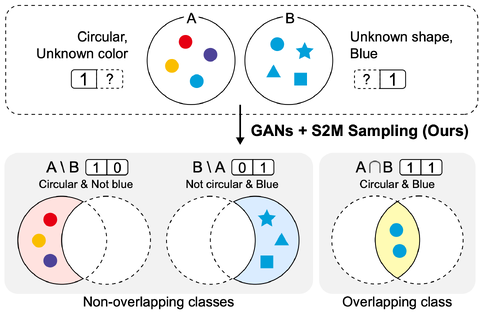

[C4] Mining Multi-Label Samples from Single Positive Labels
Youngin Cho*, Daejin Kim*, Mohammad Azam Khan, and Jaegul Choo
Accepted at NeurIPS 2022 [Paper]
· Propose a novel way to draw samples of joint classes (e.g., 𝐴 ∩ 𝐵) using only single positive labels (e.g., 𝐴, 𝐵).


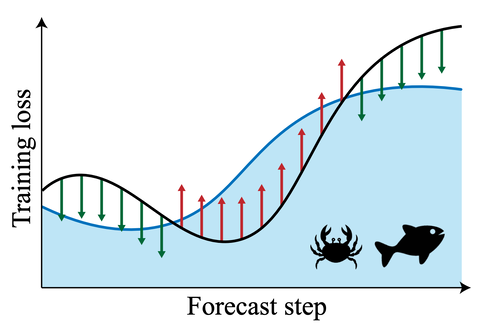

[C3] WaveBound: Dynamic Error Bounds for Stable Time Series Forecasting
Youngin Cho*, Daejin Kim*, Dongmin Kim, Mohammad Azam Khan, and Jaegul Choo
Accepted at NeurIPS 2022 [Paper] [Presentation]
· Introduce dynamic error bounds to address overfitting in time series forecasting.


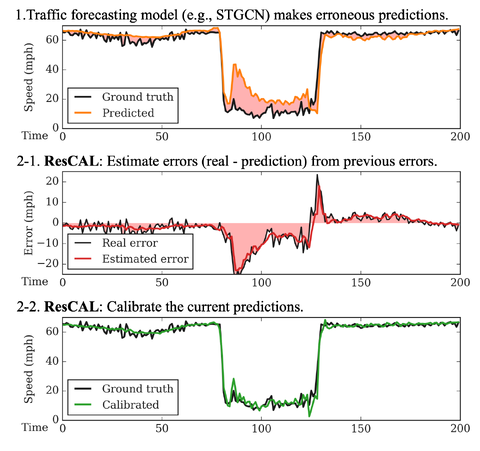

[C2] Residual Correction in Real-Time Traffic Forecasting
Daejin Kim*, Youngin Cho*, Dongmin Kim, Cheonbok Park, and Jaegul Choo
Accepted at CIKM 2022 [Paper] [Slides] [Presentation]
· Identify that recent deep-learning-based traffic forecasting methods do not handle residual autocorrelation.


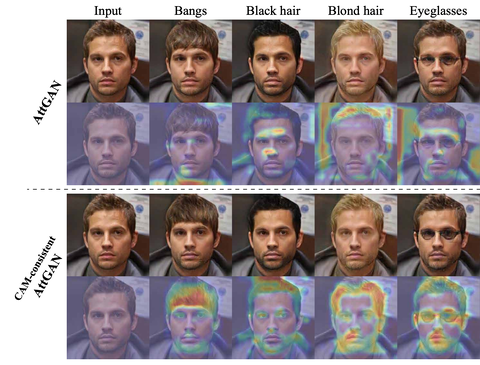

[C1] Not just Compete, but Collaborate: Local Image-to-Image Translation via Cooperative Mask Prediction
Daejin Kim, Mohammad Azam Khan, and Jaegul Choo
Accepted at CVPR 2021 [Paper] [Poster] [Presentation]
· Improve existing face editing methods by using Grad-CAM to preserve attribute-irrelevant regions.

Unpublished Work / Projects


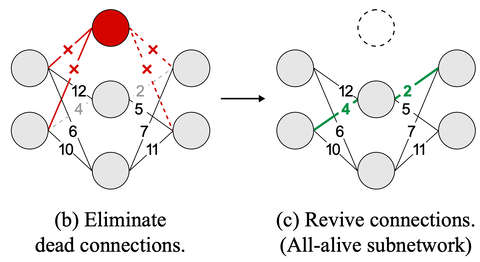

Your Lottery Ticket is Damaged: Towards All-Alive Pruning for Extremely Sparse Networks
Daejin Kim, Minsoo Kim, Hyunjung Shim, and Jongwuk Lee
Apr, 2021
· Explicitly handle the useless weights produced by existing saliency-based pruning methods.


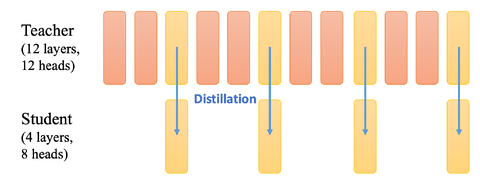

Knowledge Distillation in BERT
Daejin Kim
Aug, 2019
· Implement a smaller version of BERT (4 layers, 8 attention heads) using knowledge distillation.


Editable Text-Adaptive GAN
Daejin Kim
Mar, 2019 [Slides]
· Inspired by Text-Adaptive GAN and Editable GAN, propose a single GAN that can both generate and manipulate images for a given text prompt.

Experience

NAVER WEBTOON Corp.
Internship at NAVER WEBTOON AI Research Lab · Nov, 2022 - Current

DAVIAN, KAIST AI (Advisor: Jaegul Choo)
Master's Student · Mar, 2021 - Current

DIALLab, Sungkyunkwan University (Advisor: Jongwuk Lee)
Undergraduate Research Assistant · Jan, 2020 - Feb, 2021

Purdue University
Software Engineer · Sep, 2019 - Dec, 2019
Participated in the IITP Purdue Capstone program at the Purdue College of Information Technology, sponsored by the Korean IITP (Institute of Information & Communications Technology Planning & Evaluation).

Hanbom High School
Python & Machine Learning and Big Data Analytics Lecturer · May, 2018 - May, 2019

Awards & Certifications

Dean's List
Sungkyunkwan University · Aug, 2020 / Aug, 2019 / Mar, 2019 / Aug, 2018 / Mar, 2018 / Aug, 2017

First Place, KAIST AI World Cup, AI Commentator Session
Korea Advanced Institute of Science and Technology · Dec, 2019 [News Release]

